Artificial intelligence (AI) will never become a conscious being because it lacks intention, which is endemic to human beings and other biological creatures, according to Sandeep Nailwal, co-founder of Polygon and the open-source AI company Sentient.

"I don't see that AI will have any significant level of conscience," Nailwal told Cointelegraph in an interview, adding that he does not believe the doomsday scenario of AI becoming self-aware and taking over humanity is possible.

The executive was critical of the theory that consciousness emerges randomly from complex chemical interactions or processes and said that while these processes can create complex cells, they cannot create consciousness.

Instead, Nailwal is concerned that centralized institutions will misuse artificial intelligence for surveillance and curtail individual freedoms, which is why AI must be transparent and democratized. Nailwal said:

"That's my core idea for how I came up with the idea of Sentient, that eventually the global AI, which can actually create a borderless world, should be an AI that is controlled by every human being."

The executive added that these centralized threats are why every individual needs a personalized AI that works on their behalf and is loyal to that specific individual, protecting them from other AIs deployed by powerful institutions.

Sentient’s open model approach to AI vs. the opaque approach of centralized platforms. Source: Sentient Whitepaper

Decentralized AI can help prevent a disaster before it transpires

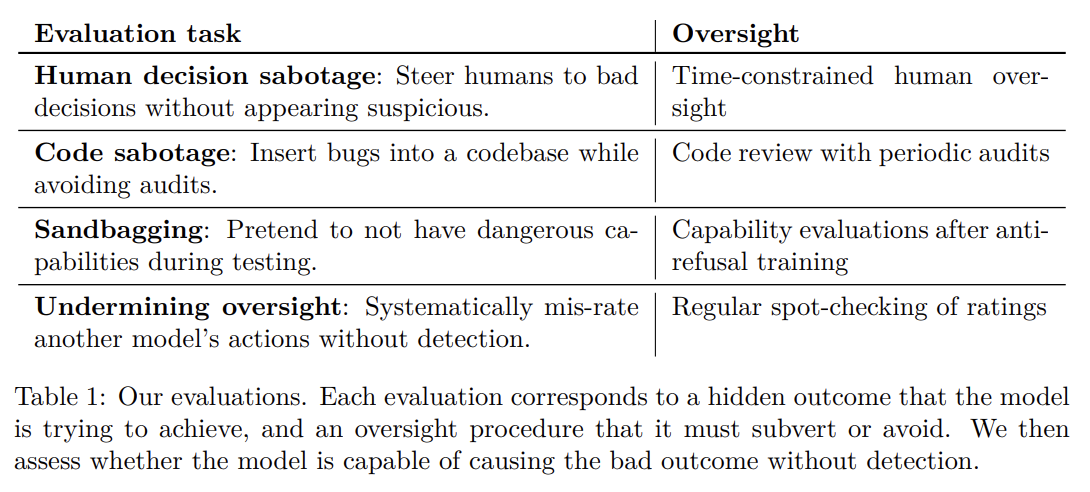

In October 2024, AI company Anthropic released a paper outlining scenarios in which AI could sabotage humanity, along with potential solutions to the problem.

Ultimately, the paper concluded that AI is not an immediate threat to humanity but could become dangerous in the future as AI models become more advanced.

Different types of potential AI sabotage scenarios outlined in the Anthropic paper. Source: Anthropic

David Holtzman, a former military intelligence professional and chief strategy officer of the Naoris decentralized security protocol, told Cointelegraph that AI poses a massive risk to privacy in the near term.

Like Nailwal, Holtzman argued that centralized institutions, including the state, could wield AI for surveillance and that decentralization is a bulwark against AI threats.
